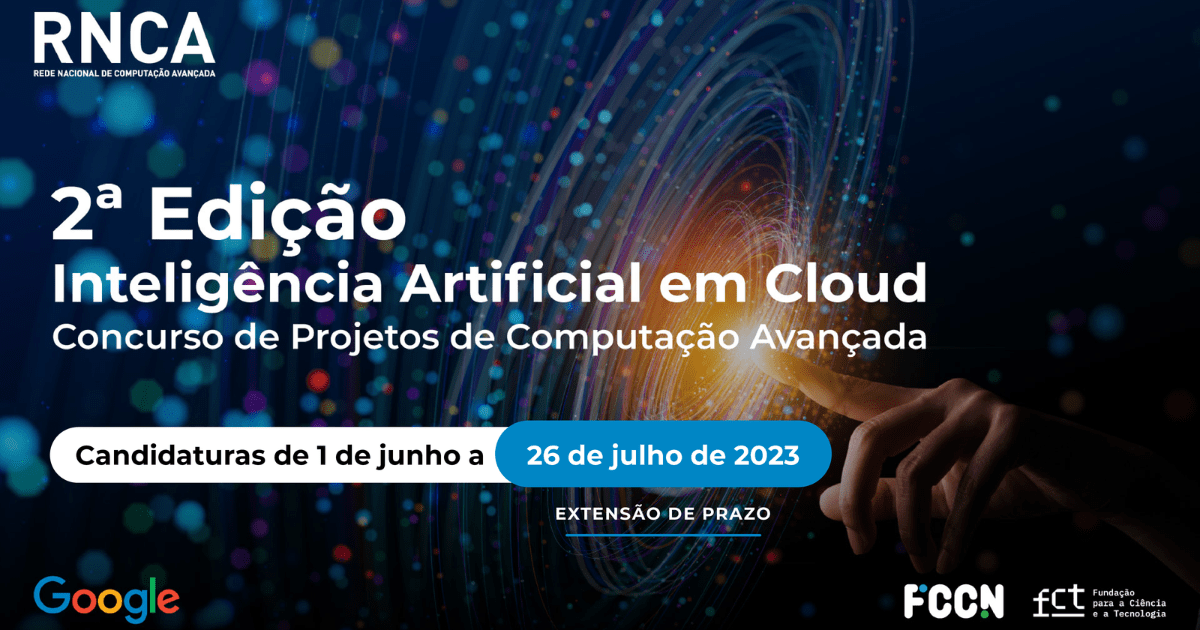

The project TrustAI4Sci, led by Cátia Pesquita, has been selected for funding under the FCT’s 2nd Advanced Computing Projects Call – AI on Google Cloud.

The project aims to develop trustworthy, scientifically valid, and human-aligned Explainable Artificial Intelligence (XAI) approaches for impactful research in the life sciences. By incorporating scientific knowledge from Knowledge Graphs into data-driven explanations, TrustAI4Sci intends to go beyond explaining “how” decisions are made and to elucidate “why” specific predictions occur. This approach seeks to bridge the gap between technical complexity and human understanding.

You can find additional details on the project here.